How to Train AI Models to Detect Artifact Mentions in Old Maps and Atlases

Introduction

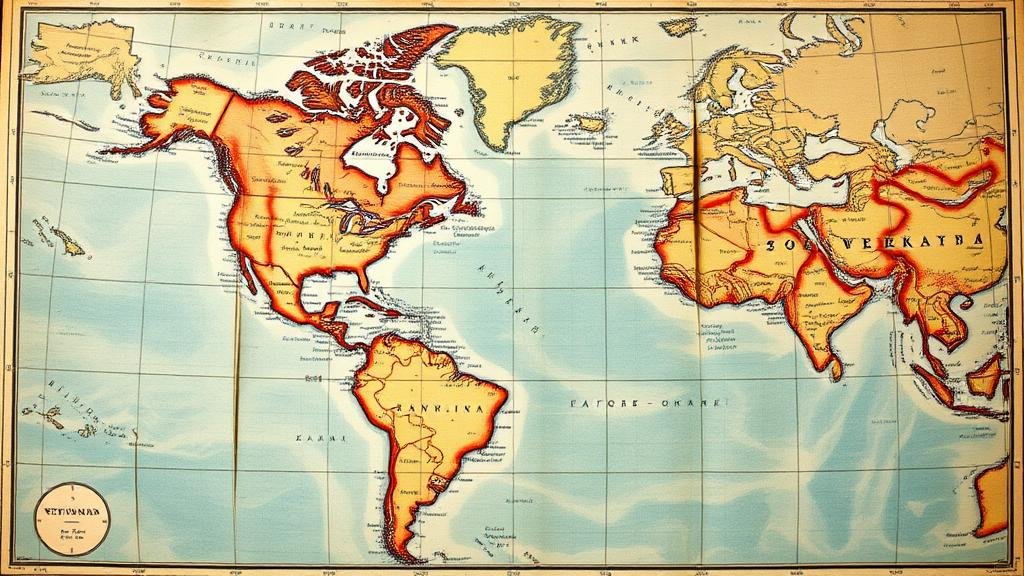

The study of historical maps and atlases provides valuable insights into the socio-cultural, political, and geographical landscapes of the past. These documents are more than visual representations of historical contexts: they also carry significant textual and symbolic references. In recent years, artificial intelligence (AI) has changed how researchers can analyze such documents, making it practical to process large collections rather than individual sheets. This article explores methods for training AI models to detect artifact mentions in historical maps and atlases, supporting data extraction and archiving for future research.

The Importance of Detecting Artifact Mentions

Detecting artifact mentions in old maps and atlases is essential for several reasons:

- Textual Analysis: The written content on maps often includes place names, historical events, and cultural references relevant to specific artifacts.

- Preservation of History: By accurately identifying and cataloging these mentions, researchers can preserve important historical narratives.

- Interdisciplinary Research: Artifact mentions enable collaboration across disciplines including archaeology, history, and geography.

Methodologies for Training AI Models

Step 1: Data Collection

The first step in training AI models is gathering a comprehensive dataset of maps and atlases. A well-curated dataset should include:

- Scanned images of historical maps, preferably labeled for mentions of artifacts.

- Text transcriptions that accompany the maps, capturing the intricate details of any mentions.

- Contextual metadata, such as the geographic area covered, the date of the map, and any archaeologically relevant details.

For example, the Library of Congress hosts a vast collection of maps that can serve as a primary source for data collection.
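
One way to keep scans, transcriptions, annotations, and metadata together is a small record type per map sheet. The sketch below is an assumption, not a standard format: the class names `MapRecord` and `ArtifactSpan`, the `"ARTIFACT"` label, and the sample path and text are all hypothetical. Annotations are stored as character offsets into the transcription, which later steps can convert into training labels.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ArtifactSpan:
    """One annotated mention: character offsets [start, end) into the transcription."""
    start: int
    end: int
    label: str = "ARTIFACT"  # hypothetical label name


@dataclass
class MapRecord:
    """One scanned sheet with its transcription, annotations, and metadata (hypothetical schema)."""
    image_path: str
    transcription: str
    spans: List[ArtifactSpan] = field(default_factory=list)
    year: Optional[int] = None      # date of the map, if known
    region: Optional[str] = None    # geographic area the map covers
    source: Optional[str] = None    # holding institution or catalog identifier

    def validate(self) -> None:
        """Raise ValueError if any span falls outside the transcription."""
        n = len(self.transcription)
        for s in self.spans:
            if not 0 <= s.start < s.end <= n:
                raise ValueError(f"span {s} out of range for text of length {n}")

    def mentions(self) -> List[str]:
        """Return the surface text of every annotated mention."""
        return [self.transcription[s.start:s.end] for s in self.spans]


if __name__ == "__main__":
    # Illustrative record; the path, text, and year are made up.
    rec = MapRecord(
        image_path="scans/sheet_001.tif",
        transcription="Ruins of an old fort near the river",
        spans=[ArtifactSpan(0, 20)],
        year=1750,
    )
    rec.validate()
    print(rec.mentions())  # ['Ruins of an old fort']
```

Validating offsets when records are created catches annotation mistakes before they silently become wrong training labels.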

Step 2: Text Preprocessing

Once the data is collected, it must undergo preprocessing to facilitate effective AI training:

- Text Recognition: Use Optical Character Recognition (OCR) to convert text in the scanned images into machine-readable form. Tools such as Tesseract or the Google Cloud Vision API are useful at this step.

- Normalization: Clean and normalize the text to reconcile OCR errors caused by unusual typefaces, variant historical spellings, and typographical errors.

For example, a map made before 1664, when the English took the Dutch colony and renamed it, might label the town “New Amsterdam” rather than “New York”, so a normalization strategy is needed for the AI to treat both names as the same place.
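
The two preprocessing steps can be sketched as follows, assuming Tesseract is driven through the `pytesseract` wrapper. `confident_words` expects the dictionary layout returned by `pytesseract.image_to_data(..., output_type=pytesseract.Output.DICT)` (parallel lists under `"text"` and `"conf"`), and `normalize` folds typographic variants before applying a variant table. The 60-point confidence cutoff and the `VARIANTS` table are hypothetical placeholders; a real project would tune the threshold and build the table from a historical gazetteer.

```python
import re
import unicodedata
from typing import Dict, List

# Hypothetical variant table mapping historical names to a modern form.
VARIANTS: Dict[str, str] = {
    "nieuw amsterdam": "new york",
    "new amsterdam": "new york",
}


def confident_words(ocr: Dict[str, list], min_conf: float = 60.0) -> List[str]:
    """Keep non-empty OCR words whose confidence is at least min_conf.

    Assumes the dict layout of pytesseract.image_to_data(...,
    output_type=Output.DICT), where non-word rows carry conf == -1.
    """
    return [
        text.strip()
        for text, conf in zip(ocr["text"], ocr["conf"])
        if text.strip() and float(conf) >= min_conf
    ]


def normalize(text: str) -> str:
    """Fold typographic variants, then map known historical names."""
    text = unicodedata.normalize("NFKC", text)  # folds ligatures such as "ﬁ"
    text = text.replace("ſ", "s")               # long s, in case it survives
    text = re.sub(r"\s+", " ", text).strip().lower()
    for old, new in VARIANTS.items():
        text = re.sub(rf"\b{re.escape(old)}\b", new, text)
    return text


# Usage with real OCR output (needs the tesseract binary, pytesseract, and Pillow):
#   from PIL import Image
#   import pytesseract
#   data = pytesseract.image_to_data(Image.open("sheet.tif"),
#                                    output_type=pytesseract.Output.DICT)
#   print(normalize(" ".join(confident_words(data))))
```

Whether to replace historical names or store the modern form alongside the original is a design choice; keeping both preserves the source wording for historians while still letting the model match mentions across periods.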

Step 3: Model Selection

Choosing the right model is critical to successfully detect mentions of artifacts. Common approaches include:

- Natural Language Processing (NLP) Models: Pre-trained NLP models like BERT or GPT-3 can be fine-tuned on your dataset, for example as token classifiers that tag each word as part of an artifact mention or not, to improve contextual understanding.

- Convolutional Neural Networks (CNNs): For image analysis, CNNs can help identify map symbols or pictorial depictions of artifacts that accompany textual mentions.

For example, a hybrid model that uses CNNs for image recognition and NLP for text analysis may improve detection accuracy compared with either model alone, since each can catch evidence the other misses.
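
The simplest way to combine the two models is late fusion: each model produces its own probability that a region contains an artifact mention, and the scores are averaged with a weight. The sketch below assumes both models already output probabilities in [0, 1]; the 0.6 text weight and 0.5 threshold are hypothetical defaults that would be tuned on a validation set. Joint architectures that fuse features inside one network are an alternative this example does not show.

```python
def fuse_scores(text_prob: float, image_prob: float, text_weight: float = 0.6) -> float:
    """Late fusion: weighted average of the text and image model probabilities."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be in [0, 1]")
    for p in (text_prob, image_prob):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be in [0, 1]")
    return text_weight * text_prob + (1.0 - text_weight) * image_prob


def is_artifact_mention(text_prob: float, image_prob: float,
                        threshold: float = 0.5, text_weight: float = 0.6) -> bool:
    """Flag a region when the fused score reaches the (hypothetical) threshold."""
    return fuse_scores(text_prob, image_prob, text_weight) >= threshold


if __name__ == "__main__":
    # Strong text evidence, weak image evidence: 0.6*0.9 + 0.4*0.3 = 0.66
    print(is_artifact_mention(0.9, 0.3))  # True
```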

Step 4: Training the Model

During the training phase, it is essential to:

- Use a labeled dataset to train the model effectively, ensuring it learns the context of artifact mentions.

- Use regularization techniques to prevent overfitting and ensure the model generalizes well to unseen data.
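
Token-classification models such as a fine-tuned BERT expect one label per token rather than character offsets. A common convention is BIO tagging (B- for the first token of a mention, I- for the following tokens, O for everything else). The sketch below converts character spans into BIO tags, assuming whitespace tokenization and spans that align with token boundaries; a subword tokenizer would need an extra alignment step. The `"ARTIFACT"` label name is hypothetical.

```python
import re
from typing import List, Tuple


def bio_tags(text: str, spans: List[Tuple[int, int]],
             label: str = "ARTIFACT") -> List[Tuple[str, str]]:
    """Whitespace-tokenize text and tag each token B-<label>, I-<label>, or O.

    Tokens only partly covered by a span are tagged O, so spans are
    assumed to start and end on token boundaries.
    """
    tagged = []
    for m in re.finditer(r"\S+", text):
        tok_start, tok_end = m.start(), m.end()
        tag = "O"
        for start, end in spans:
            if tok_start >= start and tok_end <= end:
                tag = ("B-" if tok_start == start else "I-") + label
                break
        tagged.append((m.group(), tag))
    return tagged


if __name__ == "__main__":
    text = "Ruins of an old fort near the river"
    for token, tag in bio_tags(text, [(12, 20)]):  # span covers "old fort"
        print(token, tag)
```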

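Data augmentation techniques, such as small rotations or changes in brightness and contrast, can make the model more robust, especially when working with limited datasets. Flips should be used with care, because mirroring an image also mirrors the lettering the model is meant to read.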
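
Augmentation can also be applied on the text side. The sketch below is a different technique from the image transforms above: it injects OCR-style character confusions into clean transcriptions so the text model sees the kind of noise real OCR produces. The confusion table and the 10% default rate are hypothetical; a real table would be estimated by comparing OCR output against hand transcriptions. Each character is replaced by exactly one character, so existing span offsets stay valid.

```python
import random
from typing import Dict, List, Optional

# Hypothetical table of characters that OCR tends to confuse in old type.
CONFUSIONS: Dict[str, List[str]] = {
    "s": ["f"],
    "e": ["c"],
    "l": ["1", "I"],
    "o": ["0"],
    "u": ["n"],
}


def ocr_noise(text: str, rate: float = 0.1, seed: Optional[int] = None) -> str:
    """Replace confusable characters with a look-alike at the given rate.

    Substitutions are one-for-one, so the output has the same length and
    character offsets of annotated spans remain valid.
    """
    rng = random.Random(seed)  # seeded for reproducible augmentation
    out = []
    for ch in text:
        options = CONFUSIONS.get(ch)
        if options and rng.random() < rate:
            out.append(rng.choice(options))
        else:
            out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    print(ocr_noise("ruins of an old fort near the river", rate=0.3, seed=7))
```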

Step 5: Evaluation and Refinement

Post-training, it is crucial to evaluate the model's performance using metrics like precision, recall, and F1 score. Continuous refinement through feedback loops helps to:

- Identify common errors in detection.

- Incorporate additional datasets or domain-specific annotations to enhance model capabilities.

For example, models trained on historical cartography can greatly benefit from annotations made by historians or geographers to increase contextual relevance.
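
For span detection, precision is the share of predicted mentions that are correct, recall is the share of annotated mentions that were found, and F1 is their harmonic mean. The sketch below uses strict matching, where a prediction counts only if its start, end, and label all equal a gold annotation; partial-overlap scoring is a common, more lenient alternative not shown here. Spans are assumed to be `(start, end, label)` tuples.

```python
from typing import Iterable, Tuple

Span = Tuple[int, int, str]  # (start, end, label)


def prf1(gold: Iterable[Span], pred: Iterable[Span]) -> Tuple[float, float, float]:
    """Strict span-level precision, recall, and F1."""
    gold_set, pred_set = set(gold), set(pred)
    tp = len(gold_set & pred_set)  # exact matches only
    precision = tp / len(pred_set) if pred_set else 0.0
    recall = tp / len(gold_set) if gold_set else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


if __name__ == "__main__":
    gold = [(0, 20, "ARTIFACT"), (30, 35, "ARTIFACT")]
    pred = [(0, 20, "ARTIFACT"), (40, 45, "ARTIFACT")]
    print(prf1(gold, pred))  # (0.5, 0.5, 0.5)
```

The spans in `pred - gold` (false positives) and `gold - pred` (false negatives) are a natural starting point for the error review described above.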

Real-World Applications

The ability to detect artifact mentions in old maps is being harnessed worldwide. For example:

- The British Library: Its projects apply AI to historical documents, generating valuable insights for cultural heritage preservation.

- Geo-Referenced Historical Data: Civil engineering firms are using AI models to detect boundaries and infrastructure changes in historical maps, aiding in urban planning.
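
Once a scanned map has been georeferenced, detections made in pixel coordinates can be placed on a real-world map. The sketch below assumes the six-coefficient affine geotransform convention used by GDAL, where `x = gt[0] + col*gt[1] + row*gt[2]` and `y = gt[3] + col*gt[4] + row*gt[5]`; the coefficients themselves are typically estimated from ground control points in a GIS tool. The example coefficients are hypothetical and chosen only to give readable numbers.

```python
from typing import Sequence, Tuple


def pixel_to_geo(gt: Sequence[float], col: float, row: float) -> Tuple[float, float]:
    """Apply a GDAL-style affine geotransform to a pixel position."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def bbox_to_geo(gt: Sequence[float], left: float, top: float,
                width: float, height: float) -> Tuple[float, float, float, float]:
    """Map a pixel bounding box (e.g. an OCR word box) to (min_x, min_y, max_x, max_y)."""
    corners = [(left, top), (left + width, top),
               (left, top + height), (left + width, top + height)]
    pts = [pixel_to_geo(gt, c, r) for c, r in corners]
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    # Hypothetical north-up transform: origin at (-74.05, 40.75), 0.001 degrees per pixel.
    gt = (-74.05, 0.001, 0.0, 40.75, 0.0, -0.001)
    print(bbox_to_geo(gt, 0, 0, 100, 200))
```

Transforming all four corners, rather than only two, keeps the bounding box correct when the map is rotated and the `gt[2]` and `gt[4]` terms are nonzero.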

Conclusion

Training AI models to detect artifact mentions in old maps and atlases holds considerable potential for history, archaeology, and cultural studies. Through meticulous data preparation, methodical training, and rigorous evaluation, researchers can develop robust models capable of transforming our understanding of historical artifacts. The implications extend beyond academic research, with contributions to educational initiatives and cultural preservation efforts worldwide.